Background

In Intervention 1, I found that brands which observe certain boundaries when using AI tools can improve efficiency while limiting the negative effects on their emotional connection with consumers. Building on this finding, I designed Intervention 2 to identify which visual elements most strongly affect consumers' emotional acceptance and to define emotional boundaries for brands using AI-generated content.

Key Dimensions (based on literature & case studies)

- Transparency of AI Use

Recent industry reports highlight that, although around three-quarters of advertisers already disclose when AI is used in their campaigns, basic disclosure is no longer enough. Audiences increasingly want to understand the reasoning behind AI use: how it works, why it was chosen, and what benefits it provides. Explaining the role of AI helps reduce confusion, build understanding, and strengthen consumer trust, whereas simply labelling content as AI-generated without offering context is insufficient (Author, Year).

Boundary recommendation: Clearly label AI-generated content and highlight human creators' involvement to maintain brand credibility.

- Avoiding Replacement of Core Human Figures

In August 2025, a Guess advertisement featuring an AI-generated model in Vogue US sparked strong opposition and subscription cancellations (Gut, 2025).

Boundary recommendation: While AI can assist with background generation and composition, key figures such as models or ambassadors should remain human to preserve emotional bonds and artistic value.

- Avoiding Over-Perfection and Eeriness

When AI-generated images appear overly perfect or lack human traits, they often induce a sense of eeriness, reducing perceived warmth and trust (Gu et al., 2024).

Boundary recommendation: Maintain slight imperfections and realistic textures to avoid an overly artificial or inhuman appearance.

Experiment Design: Testing Emotional Boundaries of AI Content

Objective

To identify which factors help preserve or diminish consumers’ emotional connection and perceived brand congruence in the context of AI-generated visual content.

Observation Framework

This experiment focuses on three psychological stages to capture emotional responses:

- Initial Impression: Preferences formed before participants know about any AI involvement;

- Instant Reactions: Emotional responses to different degrees of AI use (e.g., an AI-generated background, model, or styling);

- Post-Disclosure Attitude: Re-evaluation after AI involvement has been disclosed.

To identify these emotional boundaries more systematically, the experiment not only compares responses across different degrees of AI intervention but also tracks how participants' emotional responses shift as they move from not knowing about AI involvement to learning about it. This two-stage assessment helps reveal how transparency changes the framework consumers use to evaluate content (a framing effect).
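One simple way to quantify the two-stage comparison is a difference score: for each participant and visual set, subtract the pre-disclosure rating from the post-disclosure rating, then average the result per set. The Python below is a minimal sketch under stated assumptions: the 1 to 7 scale, the record layout, the `disclosure_shift` helper, and every score are hypothetical placeholders, not data or methods from this study.

```python
from statistics import mean

# Hypothetical ratings (assumed 1-7 scale; illustrative values, NOT study results).
# Each record: participant, visual set, round, dimension, score.
# Round 1 = before AI use is disclosed, round 2 = after disclosure.
ratings = [
    ("P1", "A", 1, "warmth", 6), ("P1", "A", 2, "warmth", 5),
    ("P1", "C", 1, "warmth", 6), ("P1", "C", 2, "warmth", 3),
    ("P2", "A", 1, "warmth", 5), ("P2", "A", 2, "warmth", 5),
    ("P2", "C", 1, "warmth", 5), ("P2", "C", 2, "warmth", 2),
]


def disclosure_shift(records, dimension):
    """Return the mean (round 2 - round 1) score per visual set for one dimension."""
    # Index scores by (participant, visual set, round) for the chosen dimension.
    scores = {}
    for pid, visual, rnd, dim, score in records:
        if dim == dimension:
            scores[(pid, visual, rnd)] = score

    # Pair each pre-disclosure score with the same participant's post-disclosure score.
    shifts = {}
    for (pid, visual, rnd), before in scores.items():
        if rnd == 1 and (pid, visual, 2) in scores:
            shifts.setdefault(visual, []).append(scores[(pid, visual, 2)] - before)

    # Negative values mean the rating dropped once AI use was revealed.
    return {visual: mean(diffs) for visual, diffs in sorted(shifts.items())}


print(disclosure_shift(ratings, "warmth"))
```

A paired design like this compares each participant with themselves, so differences in how generously individuals use the scale largely cancel out.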

Hypotheses (H):

- H1: Transparency in AI usage enhances emotional trust and acceptance.

- H2: Fully replacing the model with an AI-generated one weakens emotional connection more than pairing a real model with an AI-generated background or styling.

- H3: Over-perfection or eeriness significantly lowers warmth and authenticity scores.

Participants

- N ≥ 18, diverse in gender, age, and level of fashion interest to support generalisability;

- Partial overlap with Intervention 1 participants, so that changes in attitude can be compared;

- Designed for representativeness and external validity.

Procedure

Full questionnaire and flowchart

https://24042236.myblog.arts.ac.uk/files/2025/08/Al_VisualsEmotionalConnectionTest12.docx

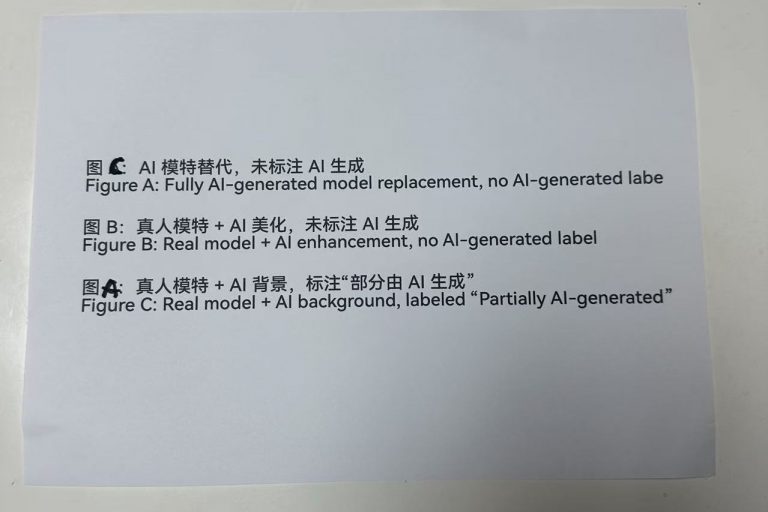

Participants are shown three sets of brand visuals (A, B, C), representing:

- Real model + AI-generated background

- Real model + AI-adjusted colouring

- Fully AI-generated model with no disclosure

Steps:

- First round: Participants rate images on realism, warmth, brand fit, and emotional connection;

- Then, AI usage details for each image are disclosed (e.g., model replacement, disclosure status);

- Second round: Participants re-evaluate and rescore;

- Final task: Rank key emotional influence factors and indicate acceptable AI usage scenarios.
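The final ranking task could be summarised by averaging each factor's position across participants, where a lower mean rank means a more influential factor. The Python below is a hedged sketch: the factor names reuse the three key dimensions above as an assumption (the questionnaire's actual list may differ), and the `mean_ranks` helper and all rankings are hypothetical placeholders, not collected data.

```python
from statistics import mean

# Hypothetical final-task rankings (1 = most influential); illustrative only.
# Factor names are an assumption based on the three key dimensions.
rankings = {
    "P1": ["human model", "transparency", "imperfection"],
    "P2": ["transparency", "human model", "imperfection"],
    "P3": ["human model", "imperfection", "transparency"],
}


def mean_ranks(rankings_by_participant):
    """Average each factor's 1-based position across participants, best first."""
    positions = {}
    for ordered_factors in rankings_by_participant.values():
        for position, factor in enumerate(ordered_factors, start=1):
            positions.setdefault(factor, []).append(position)
    averages = {factor: mean(ranks) for factor, ranks in positions.items()}
    # Sort ascending by mean rank so the most influential factor comes first.
    return sorted(averages.items(), key=lambda item: item[1])


for factor, avg in mean_ranks(rankings):
    print(f"{factor}: {avg:.2f}")
```

Mean rank is easy to read for a small sample; with N around 18, reporting the full distribution of ranks alongside it would show how much participants agree.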

References:

Gu, C., Jia, S., Lai, J., Chen, R. and Chang, X. (2024) ‘Exploring Consumer Acceptance of AI-Generated Advertisements: From the Perspectives of Perceived Eeriness and Perceived Intelligence’, Journal of Theoretical and Applied Electronic Commerce Research, 19(3), pp. 2218–2238. Available at: https://www.mdpi.com/0718-1876/19/3/108 (Accessed: 17 August 2025).

Gut, S. (2025) ‘Vogue US faces backlash over Guess ad featuring AI-generated model’, FashionNetwork, 1 August. Available at: https://uk.fashionnetwork.com/news/Vogue-us-faces-backlash-over-guess-ad-featuring-ai-generated-model,1754906.html (Accessed: 17 August 2025).

Williamson, D.A. (2024) New research: The AI Ad Gap. Sonata Insights. Available at: https://www.sonatainsights.com/blog/new-research-the-ai-ad-gap (Accessed: 19 August 2025).
